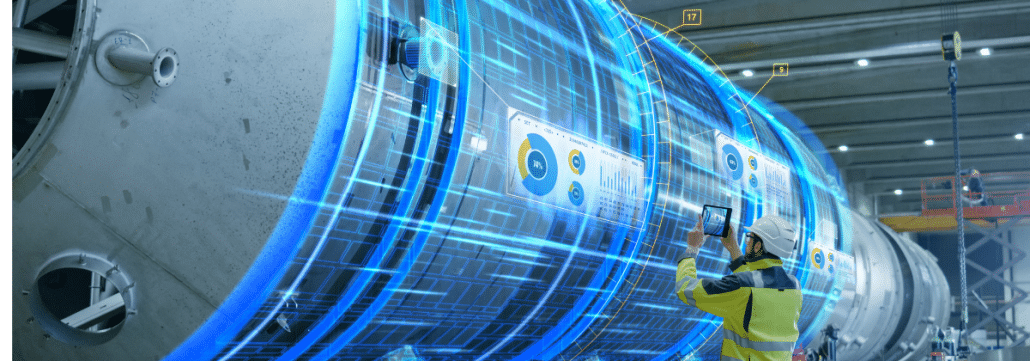

Predictive analytics use cases are validating the technology’s value proposition and driving increased investment in artificial intelligence (AI) and machine learning (ML). Real-world examples in manufacturing and other asset-intensive industries demonstrate its ability to study data patterns in systems, sensors, and processes; automatically detect anomalies; and proactively predict failures and inefficiencies in time to make corrections.

What is not well understood is that ML models require ongoing care and feeding. A common but unfounded expectation is that ML initiatives can be delivered as projects with a fixed end date. In reality, model implementation is never “done” because operational objectives, connected systems, process inputs, and personnel change over time. Without continual tuning and retraining, what a model has learned will drift out of step with the process it monitors, leading to faulty conclusions and to higher risks and costs from missed opportunities to improve reliability, efficiency, and performance.

Return on investment also deteriorates when an organization lacks the awareness or resources to manage ML models proactively. Selecting a predictive analytics solution that includes oversight by data scientists and subject matter experts, at no extra cost, helps protect against excessive lifecycle costs for the technology.

Change is inevitable

To optimize predictive analytics and avoid undesirable surprises, attention must be paid to how conditions and variables evolve after ML models are trained and implemented. Examples of what may be encountered include:

Incomplete training: Systems may have several significantly different modes of operation. For example, expected system performance is radically different in startup, shutdown, and steady-state modes. A model’s prediction quality will decline when the system enters a mode the model has not been trained on. Proactive retraining on data from that mode is needed to keep the model relevant and accurate.

Unexpected inputs: An ML model that has not been trained on the full range of possible inputs will predict less reliably when it encounters something unexpected. For example, if an oil and gas plant’s model is trained on one composition of crude, or a coal-fired power plant’s model is trained on one composition of coal, and a different grade of feedstock later enters the process from upstream, the model may need to be retrained to accommodate the new input.

Evolving priorities: A predictive model tuned to favor precision raises fewer alerts, but a larger share of them are correct. A model tuned to favor recall catches a larger share of real problems, at the cost of more false positives and irrelevant alerts. When the desired balance between precision and recall shifts over time, the model needs to be retrained to the new objective, even if it is still predicting the same physical variables.

Altered systems: Adding new equipment to a system, such as an additional chiller, or upgrading an asset to one with a different power curve, are examples of system alterations that require algorithm retraining. Likewise, if a sensor breaks or is removed, or a new type of sensor is added, retraining becomes necessary.

New personnel: A new plant manager or engineer may bring a different operating philosophy to the plant. If they run equipment at setpoints that differ from those in the model’s training data, the model must be retrained to reflect the change.
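Several of the changes above (untrained operating modes, unexpected inputs, and added or removed sensors) can be surfaced with simple automated checks that flag when retraining may be due. The sketch below is a minimal illustration under stated assumptions, not the method of Tignis or any other product: it assumes each sensor’s training data is summarized only by its minimum and maximum, and it flags a sensor that has disappeared, a sensor that is new, or a sensor whose recent readings fall outside the trained range more often than a chosen fraction (the hypothetical `max_out_of_range` parameter, 5% by default). `SensorProfile`, `build_profiles`, `retraining_reasons`, and the sensor names and values are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class SensorProfile:
    """Hypothetical summary of one sensor's training data: its observed range."""
    low: float
    high: float


def build_profiles(training: Dict[str, Sequence[float]]) -> Dict[str, SensorProfile]:
    # Assumes every training series is non-empty; records each sensor's min and max.
    return {name: SensorProfile(min(vals), max(vals)) for name, vals in training.items()}


def retraining_reasons(profiles: Dict[str, SensorProfile],
                       recent: Dict[str, Sequence[float]],
                       max_out_of_range: float = 0.05) -> List[str]:
    """Return human-readable reasons the model may need retraining (empty if none)."""
    reasons: List[str] = []
    # Altered systems: sensors present in training but missing now, and vice versa.
    for name in sorted(profiles.keys() - recent.keys()):
        reasons.append(f"missing sensor: {name}")
    for name in sorted(recent.keys() - profiles.keys()):
        reasons.append(f"new sensor: {name}")
    # Unexpected inputs or untrained modes: too many readings outside the trained range.
    for name in sorted(profiles.keys() & recent.keys()):
        vals = recent[name]
        if not vals:
            continue
        p = profiles[name]
        outside = sum(1 for v in vals if v < p.low or v > p.high)
        frac = outside / len(vals)
        if frac > max_out_of_range:
            reasons.append(
                f"{name}: {frac:.0%} of readings outside trained range [{p.low}, {p.high}]"
            )
    return reasons


# Hypothetical example: flow_rate sensor removed, vibration sensor added,
# and inlet_temp now running hotter than anything seen in training.
training = {"inlet_temp": [310, 312, 315, 318, 320], "flow_rate": [40, 42, 41, 43, 44]}
recent = {"inlet_temp": [311, 330, 335, 316], "vibration": [0.2, 0.3]}

profiles = build_profiles(training)
for reason in retraining_reasons(profiles, recent):
    print(reason)
# missing sensor: flow_rate
# new sensor: vibration
# inlet_temp: 50% of readings outside trained range [310, 320]
```

A min/max range check is deliberately crude. Keeping a separate profile per operating mode (startup, shutdown, steady state) or using a statistical drift test may give earlier and more reliable signals, but the basic idea is the same: compare what the model sees now with what it was trained on.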
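The precision/recall trade-off described under “Evolving priorities” can be made concrete in a few lines of Python. This sketch assumes a model that outputs a failure score for each event and raises an alert when the score meets a threshold. It shifts the balance by moving that threshold, which is one common lever but not the only one, and not necessarily how any particular product does it. The scores, labels, and the `precision_recall` helper are hypothetical illustration data and code.

```python
from typing import List, Tuple


def precision_recall(scores: List[float], labels: List[bool], threshold: float) -> Tuple[float, float]:
    """Precision and recall when alerting on every score >= threshold (hypothetical helper)."""
    predicted = [s >= threshold for s in scores]
    tp = sum(p and y for p, y in zip(predicted, labels))        # correct alerts
    fp = sum(p and not y for p, y in zip(predicted, labels))    # false alarms
    fn = sum((not p) and y for p, y in zip(predicted, labels))  # missed failures
    # Report 0.0 when a ratio is undefined (no alerts, or no real failures).
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


# Made-up failure scores and whether a real failure followed each event.
scores = [0.95, 0.90, 0.80, 0.70, 0.60, 0.40, 0.30, 0.20]
labels = [True, True, False, True, False, True, False, False]

for t in (0.85, 0.5, 0.25):
    p, r = precision_recall(scores, labels, t)
    print(f"threshold={t:.2f} precision={p:.2f} recall={r:.2f}")
# threshold=0.85 precision=1.00 recall=0.50
# threshold=0.50 precision=0.60 recall=0.75
# threshold=0.25 precision=0.57 recall=1.00
```

A high threshold raises only two alerts, both correct, but misses half the failures; a low threshold catches every failure while three of its seven alerts are false. Which setting is “right” depends on the plant’s current priorities, which is why a change in those priorities calls for revisiting the model.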

Managing change is optimal

Having ongoing ML oversight services included with your AI solution helps prevent unexpected risks and declining ROI. Tignis’ physics-driven analytics solution includes subject matter expertise for the life of the subscription, at no extra cost. Not only do Tignis ML models automatically retrain on new data as it arrives, but our data science experts also ensure your objectives are always being met by continuously monitoring the performance of your connected systems and meeting regularly with your teams to discuss model training and optimization opportunities.

Though the ongoing need for model tuning is not always well communicated, you do not have to be caught unaware. Remember that machine learning is never done, and you will be on the right track to optimizing your analytics.